Disclosure: This post includes affiliate links; I may receive compensation if you purchase products or services from the different links provided in this article.


Hello friends, caching is not just an important topic for System Design interviews; it is also a core technique in software development that enables faster data retrieval, reduces load times, and improves user experience.

For developers, mastering caching concepts is crucial as it can significantly optimize application performance and scalability.

In the past, I have talked about common System Design questions like API Gateway vs Load Balancer, Horizontal vs Vertical Scaling, and Forward Proxy vs Reverse Proxy, as well as common System Design problems. In this article, we will explore the fundamentals of caching in system design and learn the different caching strategies that are essential knowledge for technical interviews.

Caching is also one of the essential System Design topics for interviews, so you must prepare it well.

In this article, you will learn ten essential caching concepts, ranging from client-side and server-side strategies to more advanced techniques like distributed caching and cache replacement policies.

So what are we waiting for? Let's start.

By the way, if you are preparing for System Design interviews and want to learn System Design in depth, you can also check out sites like ByteByteGo, Design Guru, Exponent, Educative, and Udemy, which have many great System Design courses, and a System Design interview template like this, which you can use to answer any System Design question.

If you need more choices, you can also see this list of the best System Design courses, books, and websites.

P.S. Keep reading until the end. I have a free bonus for you.

What is Caching? Which data to Cache? Where to Cache?

While designing a distributed system, caching should be strategically placed to optimize performance, reduce latency, and minimize load on backend services.

Caching can be implemented at multiple layers:

Client-Side Cache

This involves storing frequently accessed data on the client device, reducing the need for repeated requests to the server. It is effective for data that doesn't change frequently and can significantly improve user experience by reducing latency.

Edge Cache (Content Delivery Network - CDN)

CDNs cache content at the edge nodes closest to the end-users, which helps in delivering static content like images, videos, and stylesheets faster by serving them from geographically distributed servers.

Application-Level Cache

This includes in-memory caches such as Redis or Memcached within the application layer. These caches store results of expensive database queries, session data, and other frequently accessed data to reduce the load on the database and improve application response times.

Database Cache

Techniques such as query caching in the database layer store the results of frequent queries. This reduces the number of read operations on the database and speeds up data retrieval.

Distributed Cache

In a distributed system, a distributed cache spans multiple nodes to provide high availability and scalability. It has to keep cached data consistent across those nodes while handling the high throughput required by large-scale systems.

When designing a caching strategy, it's crucial to determine what data to cache by analyzing usage patterns, data volatility, and access frequency.

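An appropriate eviction or expiration policy, such as LRU (Least Recently Used) or TTL (Time to Live), keeps the cache relevant: LRU makes room by evicting entries that have not been accessed recently, while TTL expires entries after a fixed lifetime so that stale data is purged.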

Moreover, considering consistency models and cache invalidation strategies is vital to ensure that cached data remains accurate and up-to-date across the system.

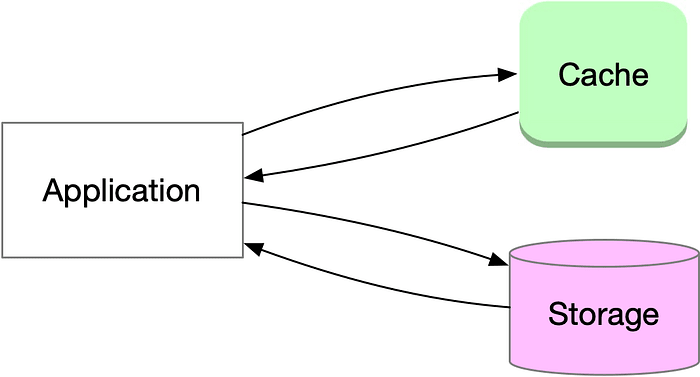

And, here is a nice diagram on caching from DesignGuru.io to illustrate what I just said.

10 Caching Basics for System Design Interview

Here are 10 essential caching-related basics and concepts every programmer must know before going for any System Design interview.

1) Client-side caching

Client-side caching is a fundamental technique where data is stored on the user's device to minimize server requests and improve load times. Two primary methods include:

- Browser Cache: Stores resources like CSS, JavaScript, and images locally to reduce page load times on subsequent visits.

- Service Workers: Enable offline access by caching responses, allowing applications to function without an internet connection.

In short:

- browser cache: stores CSS, JS, and images to reduce load time

- service workers: enable offline access by caching response
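
To make the browser cache part concrete, here is a minimal Python sketch of what a server might send so that a browser can cache a static file. It is my own illustration, not tied to any web framework: the function names `cache_headers` and `respond` and the one-hour `max_age_seconds` default are assumptions. `Cache-Control` and `ETag` are standard HTTP headers, and a `304 Not Modified` response tells the browser to reuse its local copy.

```python
import hashlib
from typing import Dict, Optional, Tuple


def cache_headers(body: bytes, max_age_seconds: int = 3600) -> Dict[str, str]:
    """Build headers that allow a browser to cache this resource (hypothetical helper)."""
    # A content hash works as a validator: it changes whenever the body changes.
    etag = '"' + hashlib.sha256(body).hexdigest()[:16] + '"'
    return {
        "Cache-Control": f"public, max-age={max_age_seconds}",
        "ETag": etag,
    }


def respond(body: bytes, if_none_match: Optional[str] = None) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, payload) for a request for this resource."""
    headers = cache_headers(body)
    if if_none_match == headers["ETag"]:
        # The browser already holds this exact version, so send no body.
        return 304, headers, b""
    return 200, headers, body


# First visit: full response. Repeat visit: the browser sends the ETag back.
status, headers, payload = respond(b"body { color: black; }")
revisit_status, _, revisit_payload = respond(b"body { color: black; }", headers["ETag"])
```

Within `max-age` the browser does not contact the server at all; after that, the ETag check lets it revalidate without downloading the file again.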

Here is what client-side caching looks like:

2) Server-side caching

Server-side caching stores data on the server to speed up responses to user requests.

Key strategies include:

- Page Caching: Saves entire web pages, allowing faster delivery on subsequent requests.

- Fragment Caching: Caches specific parts of a page, such as sidebars or navigation bars, to enhance loading efficiency.

- Object Caching: Stores expensive query results to prevent repeated calculations.

In short:

- page caching: cache the entire web page

- fragment caching: cache page components like sidebars and navigation bars

- object caching: cache expensive query results
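
As a rough illustration of fragment caching, the sketch below renders a sidebar once and reuses it for every page. Everything here is hypothetical (`cached_fragment`, `render_sidebar`, `render_page`, and the plain dictionary used as the cache); a real application would more likely use its framework's cache or a store like Redis.

```python
from typing import Callable, Dict

# In-process fragment cache: fragment name -> rendered HTML.
fragment_cache: Dict[str, str] = {}
render_calls = {"sidebar": 0}


def cached_fragment(key: str, render: Callable[[], str]) -> str:
    """Return the cached fragment, rendering it only on the first request."""
    if key not in fragment_cache:
        fragment_cache[key] = render()
    return fragment_cache[key]


def render_sidebar() -> str:
    """Stand-in for an expensive render (for example, a 'popular posts' query)."""
    render_calls["sidebar"] += 1
    return "<aside>Popular posts</aside>"


def render_page(article_html: str) -> str:
    """The article body changes per page, but the sidebar fragment is shared."""
    sidebar = cached_fragment("sidebar", render_sidebar)
    return f"<main>{article_html}</main>{sidebar}"
```

Page caching would store the whole string returned by `render_page` under the URL instead, and object caching would store the query result that `render_sidebar` depends on.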

Here is what server-side caching looks like:

image_credit --- ByteByteGo

3) Database caching

Database caching is crucial for reducing database load and improving query performance. Important techniques include:

- Query Caching: Stores the results of database queries to quickly serve repeat requests.

- Row Level Caching: Caches frequently accessed rows to avoid repeated database fetches.

In short:

- query caching: cache db query results to reduce load

- row level caching: cache popular rows to avoid repeated fetches
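
One common way to get this effect is to keep a small cache of rows in front of the database. The sketch below uses Python's built-in `sqlite3` with an in-memory database; the `users` table, the `get_user` function, and the dictionary cache are all assumptions made for illustration, not a feature of any particular database.

```python
import sqlite3
from typing import Dict, Optional, Tuple

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (id, name) VALUES (?, ?)", [(1, "Ada"), (2, "Linus")])

row_cache: Dict[int, Tuple[int, str]] = {}
stats = {"db_reads": 0}


def get_user(user_id: int) -> Optional[Tuple[int, str]]:
    """Return the row from the cache if present, otherwise read it from the database."""
    if user_id in row_cache:
        return row_cache[user_id]
    stats["db_reads"] += 1
    row = conn.execute(
        "SELECT id, name FROM users WHERE id = ?", (user_id,)
    ).fetchone()
    if row is not None:
        # Only rows that exist are cached in this sketch.
        row_cache[user_id] = row
    return row
```

Repeated calls to `get_user(1)` hit the database only once. The hard part, which this sketch leaves out, is removing or updating the cached row when the underlying row changes.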

Here is an example of database caching on AWS:

4) Application-level caching

Application-level caching focuses on caching within the application to reduce computation and data retrieval times. Strategies include:

- Data Caching: Stores specific data points or entire datasets for quick access.

- Computational Caching: Caches the results of expensive computations to avoid repeated processing.

In short:

- data caching: cache specific data points or entire datasets

- computational caching: cache expensive computation results to avoid recalculation
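
For computational caching in Python, the standard library's `functools.lru_cache` decorator is often enough. In this small sketch, the `shipping_quote` function and its pricing numbers are made up for illustration; only `lru_cache` itself is a real API.

```python
from functools import lru_cache

call_count = {"n": 0}


@lru_cache(maxsize=128)
def shipping_quote(weight_kg: int, zone: str) -> float:
    """Pretend this is an expensive calculation; results are cached per argument pair."""
    call_count["n"] += 1
    base = {"domestic": 5.0, "international": 20.0}[zone]
    return base + 1.5 * weight_kg


shipping_quote(2, "domestic")  # computed
shipping_quote(2, "domestic")  # served from the cache, the function body does not run
```

This only works safely for functions whose result depends solely on their arguments. `shipping_quote.cache_info()` reports hits and misses, and `shipping_quote.cache_clear()` empties the cache.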

5) Distributed caching

Distributed caching enhances scalability by spreading cache data across multiple servers, allowing high availability and fault tolerance.

In short, this type of caching spreads the cache across many servers for scalability.
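
A common interview follow-up is how a key finds its server. One well-known answer is consistent hashing, sketched below. The `HashRing` class, the node names, and the choice of 100 virtual points per node are my assumptions for illustration; real clients for Redis or Memcached have their own implementations.

```python
import bisect
import hashlib
from typing import Dict, List


def _hash(value: str) -> int:
    """Map a string to a large integer position on the ring."""
    return int(hashlib.sha256(value.encode("utf-8")).hexdigest(), 16)


class HashRing:
    """Consistent-hash ring that maps cache keys to node names."""

    def __init__(self, nodes: List[str], replicas: int = 100) -> None:
        self._ring: Dict[int, str] = {}
        self._points: List[int] = []
        for node in nodes:
            # Several virtual points per node spread the keys more evenly.
            for i in range(replicas):
                point = _hash(f"{node}#{i}")
                self._ring[point] = node
                bisect.insort(self._points, point)

    def node_for(self, key: str) -> str:
        """Walk clockwise from the key's position to the next node point."""
        idx = bisect.bisect(self._points, _hash(key)) % len(self._points)
        return self._ring[self._points[idx]]


ring = HashRing(["cache-a", "cache-b", "cache-c"])
owner = ring.node_for("user:42")  # always the same node for the same key
```

The benefit over a simple `hash(key) % number_of_servers` is that adding or removing a server moves only the keys that belong to that server, instead of reshuffling almost everything.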

Here is what a distributed cache with Redis looks like:

6) CDN

Content Delivery Networks (CDNs) are used to cache static files close to users via edge servers, significantly reducing latency and speeding up content delivery.

In short, CDNs store static files near users on edge servers for low latency.

Also, here is a nice diagram on how a CDN works by DesignGuru.io

7) Cache replacement policies

Cache replacement policies determine how caches handle data eviction. Common policies include:

- Least Recently Used (LRU): Evicts the least recently accessed items first.

- Most Recently Used (MRU): Evicts the most recently accessed items first.

- Least Frequently Used (LFU): Evicts items that are accessed least often.

In short:

- LRU: removes the least recently accessed items first

- MRU: removes the most recently accessed items first

- LFU: removes items that are accessed least often
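
LRU is the policy interviewers most often ask you to implement, so here is a minimal sketch built on `collections.OrderedDict`. The `LRUCache` class name and its `get`/`put` methods are my own choices for illustration.

```python
from collections import OrderedDict
from typing import Any, Hashable


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items: "OrderedDict[Hashable, Any]" = OrderedDict()

    def get(self, key: Hashable, default: Any = None) -> Any:
        if key not in self._items:
            return default
        # A read counts as a use, so move the key to the "most recent" end.
        self._items.move_to_end(key)
        return self._items[key]

    def put(self, key: Hashable, value: Any) -> None:
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            # The first item in the ordering is the least recently used one.
            self._items.popitem(last=False)


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # "a" is now the most recently used
cache.put("c", 3)  # evicts "b", the least recently used
```

Changing `popitem(last=False)` to `popitem(last=True)` before inserting the new item would give MRU behaviour instead; LFU needs an access counter per key and is more work.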

8) Hierarchical caching

Hierarchical caching involves multiple cache levels (e.g., L1, L2) to balance speed and storage capacity: a small, fast level is checked first, and a larger, slower level is checked on a miss. This model is quite common in CPUs.

In short:

- caching at many levels (L1, L2 caches) for speed and capacity
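
The same idea applies in software, for example a small in-process cache in front of a larger shared cache. The sketch below is a simplified illustration: `TwoLevelCache`, the `load` callback, and the first-in-first-out eviction in L1 are all assumptions, and both levels are plain dictionaries here.

```python
from typing import Callable, Dict


class TwoLevelCache:
    """Small L1 in front of a larger L2, with a loader for the backing store."""

    def __init__(self, load: Callable[[str], str], l1_capacity: int = 2) -> None:
        self._load = load
        self._l1: Dict[str, str] = {}  # small and fast
        self._l2: Dict[str, str] = {}  # larger and slower (unbounded in this sketch)
        self._l1_capacity = l1_capacity
        self.stats = {"l1_hits": 0, "l2_hits": 0, "loads": 0}

    def get(self, key: str) -> str:
        if key in self._l1:
            self.stats["l1_hits"] += 1
            return self._l1[key]
        if key in self._l2:
            self.stats["l2_hits"] += 1
            value = self._l2[key]
        else:
            # Miss at every level: go to the backing store and fill L2.
            self.stats["loads"] += 1
            value = self._load(key)
            self._l2[key] = value
        self._promote(key, value)
        return value

    def _promote(self, key: str, value: str) -> None:
        """Copy the value into L1, evicting the oldest L1 entry if it is full."""
        if len(self._l1) >= self._l1_capacity:
            oldest = next(iter(self._l1))  # dicts keep insertion order
            del self._l1[oldest]
        self._l1[key] = value
```

A value evicted from L1 is still in L2, so the next read costs an L2 lookup rather than a trip to the backing store.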

9) Cache invalidation

Cache invalidation ensures that stale data is removed from the cache. Methods include:

- Time-to-Live (TTL): Sets an expiry time for cached data.

- Event-based Invalidation: Triggers invalidation based on specific events or conditions.

- Manual Invalidation: Allows developers to manually update the cache using tools.

In short:

- TTL: set expiry time

- event based: invalidate based on events or conditions

- manual: update cache using tools
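
Here is a minimal sketch that combines TTL expiry with an explicit `invalidate` call that an event handler (or a developer) could trigger. The `TTLCache` class and its method names are hypothetical; the `clock` parameter exists only so that time can be controlled in tests.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Cache whose entries expire a fixed number of seconds after being set."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._items: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def set(self, key: str, value: Any) -> None:
        self._items[key] = (self._clock() + self._ttl, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._items.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # The entry is past its time-to-live: drop it and report a miss.
            del self._items[key]
            return None
        return value

    def invalidate(self, key: str) -> None:
        """Event-based or manual invalidation: remove the entry right away."""
        self._items.pop(key, None)
```

For example, a handler for a "product updated" event could call `cache.invalidate("product:42")` so that the next read fetches fresh data instead of waiting for the TTL to run out.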

Here is a nice System design cheat sheet about cache invalidation methods by DesignGuru.io to understand this concept better:

10) Caching patterns

Finally, caching patterns are strategies for synchronizing cache with the database. Common patterns include:

- Write-through: Writes data to both the cache and the database simultaneously.

- Write-behind: Writes data to the cache immediately and to the database asynchronously.

- Write-around: Writes data directly to the database, bypassing the cache. This keeps rarely read data from filling the cache, but the first read after a write is a cache miss.

In short:

- write-through: data is written to the cache and the database at once

- write-behind: data is written to the cache and asynchronously to database

- write-around: data is written directly to the database, bypassing the cache
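
The three patterns are easier to compare side by side in code. This is a simplified, single-process sketch: the `Store` class is hypothetical, both the cache and the database are plain dictionaries, and the asynchronous part of write-behind is modelled as a queue that `flush` drains.

```python
from collections import deque
from typing import Deque, Dict, Optional, Tuple


class Store:
    """Toy cache plus database used to contrast the three write patterns."""

    def __init__(self) -> None:
        self.cache: Dict[str, str] = {}
        self.db: Dict[str, str] = {}
        self.pending: Deque[Tuple[str, str]] = deque()  # writes not yet in the database

    def write_through(self, key: str, value: str) -> None:
        # Both stores are updated before the write returns.
        self.db[key] = value
        self.cache[key] = value

    def write_behind(self, key: str, value: str) -> None:
        # The cache is updated now; the database write is queued for later.
        self.cache[key] = value
        self.pending.append((key, value))

    def flush(self) -> None:
        """Stand-in for the background job that persists queued writes."""
        while self.pending:
            key, value = self.pending.popleft()
            self.db[key] = value

    def write_around(self, key: str, value: str) -> None:
        # Only the database is written; any old cached copy is dropped.
        self.db[key] = value
        self.cache.pop(key, None)

    def read(self, key: str) -> Optional[str]:
        if key in self.cache:
            return self.cache[key]
        value = self.db.get(key)
        if value is not None:
            self.cache[key] = value  # fill the cache on a miss
        return value
```

The trade-offs show up directly: write-through pays for two writes on every update, write-behind can lose the queued writes if the process dies before `flush` runs, and write-around makes the first read after a write go to the database.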

Here is another great diagram to understand various caching strategies, courtesy of DesignGuru.io, one of the best places to learn System Design.

Conclusion

That's all about the 10 essential cache-related concepts for System Design interviews. Caching can significantly improve the performance, scalability, and user experience of your application, but it also brings stale data and invalidation problems, so use it carefully.

Bonus

As promised, here is the bonus for you: a free book. I just found a new free book to learn Distributed System Design, which you can read here on Microsoft: https://info.microsoft.com/rs/157-GQE-382/images/EN-CNTNT-eBook-DesigningDistributedSystems.pdf
